As consumers, we often find ourselves scrutinizing the produce section of our local grocery store, weighing a seemingly endless choice of fruits and vegetables. Technology promises to improve this experience, which raises an important question: can machine learning predict food quality as accurately as experienced human judgment? Recent research by a team at the Arkansas Agricultural Experiment Station suggests that this may one day be possible, bridging the gap between human perception and machine intelligence in food evaluation.

Assessing food quality has traditionally relied heavily on human observers, whose perception draws on a sophisticated interpretation of sensory inputs. A significant drawback of human judgment, however, is its sensitivity to environmental factors such as lighting, which can skew quality assessments of fruits and vegetables. The study, led by Dongyi Wang, an assistant professor specializing in smart agriculture and food manufacturing, explored how machine learning models trained on data derived from human perception can reduce these inconsistencies.

Critically, the research underscores a fundamental property of machine learning: a model is only as reliable as the data it learns from. The study found that incorporating human evaluations of food quality considerably improves the accuracy of computer-based predictions. Machine-only evaluations typically lack context about illumination and how it affects color perception; by addressing this gap, the team made a compelling case for combining human assessments with machine learning models.

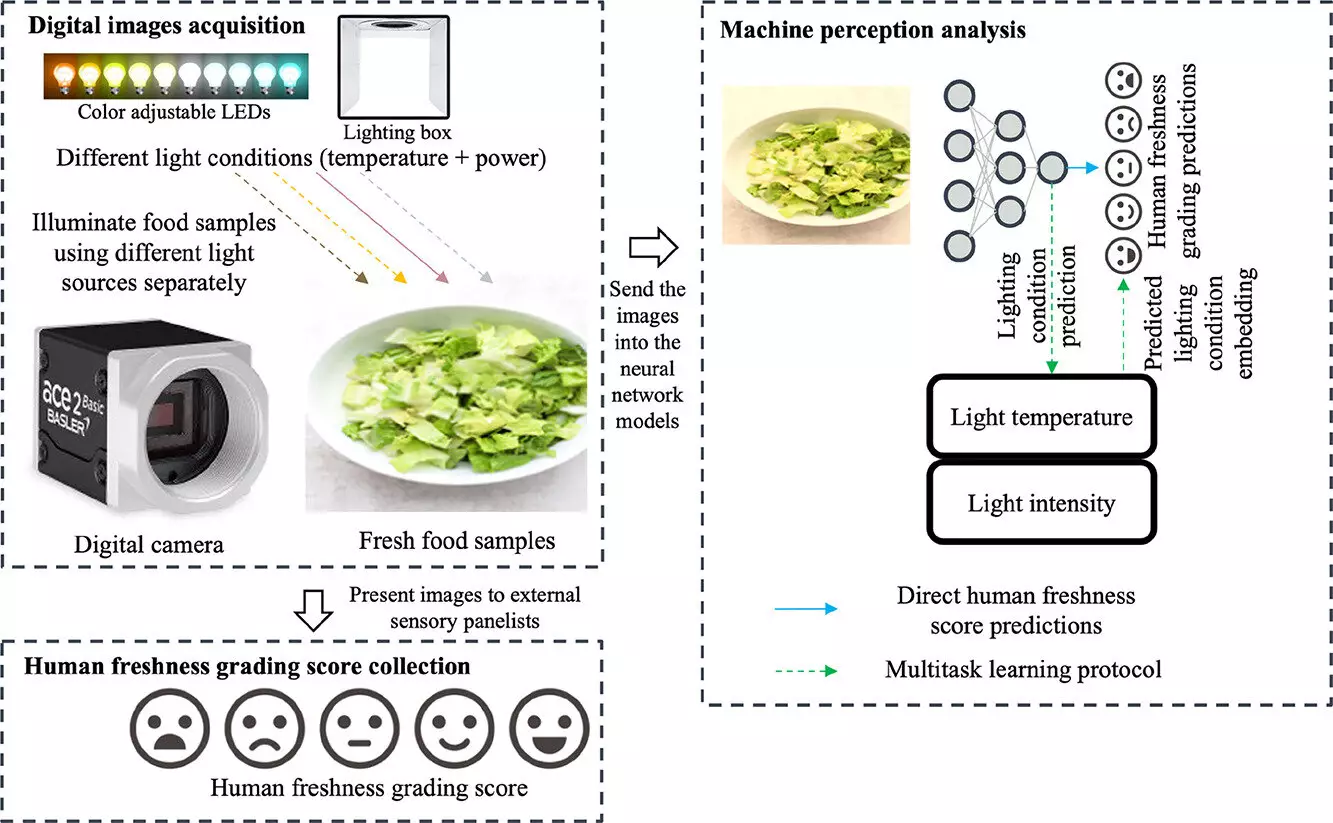

For their research, the team used Romaine lettuce as a case study, conducting sensory tests under varied lighting conditions to capture human perceptions of freshness. Participants were screened to exclude confounding factors such as color blindness and were asked to grade the quality of lettuce images over a series of days. The tests were designed around the fact that lighting can dramatically change how fresh produce appears.

Using a dataset of 675 images, the researchers tested several machine learning models to evaluate how well they could mimic human perception. The images covered varying degrees of browning and a range of lighting, from cool blue tones to warm orange, allowing the models to learn the subtleties of human assessment.
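To make the lighting variable concrete, here is a minimal Python sketch, not the study's method, of how the same pixel shifts under cooler or warmer light. It assumes a simple diagonal (von Kries-style) model in which each RGB channel is scaled by a fixed gain; the `ILLUMINANTS` gains, the sample pixel, and the functions `relight` and `relight_image` are hypothetical illustrations.

```python
# Hypothetical per-channel (R, G, B) gains approximating a neutral, a cool
# (bluish) and a warm (orangish) light source. Illustrative values only;
# they are NOT taken from the study.
ILLUMINANTS = {
    "neutral": (1.0, 1.0, 1.0),
    "cool": (0.85, 0.95, 1.20),
    "warm": (1.20, 1.00, 0.80),
}


def relight(pixel, gains):
    """Apply a diagonal (von Kries-style) illumination change to one RGB pixel.

    Each channel is scaled independently, rounded, and clamped to 0-255.
    """
    return tuple(min(255, max(0, round(c * g))) for c, g in zip(pixel, gains))


def relight_image(pixels, illuminant):
    """Relight a flat list of RGB pixels under a named (hypothetical) illuminant."""
    gains = ILLUMINANTS[illuminant]
    return [relight(p, gains) for p in pixels]


# A single hypothetical brownish pixel from a lettuce leaf.
browned = (140, 120, 60)
for name in ILLUMINANTS:
    print(name, relight_image([browned], name))
```

Because the same leaf produces different pixel values under each setting, a model that sees only pixels cannot tell a lighting change from a change in the produce itself; human ratings collected under each lighting condition are one way to supply that missing context.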

The results were notable: when trained with human perception data, the machine learning models reduced their prediction errors by approximately 20% compared with models that did not account for human variability. This improvement supports integrating human-derived insights into training datasets and suggests that similar methods could benefit other machine vision applications.
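The article does not say which error metric the study used. As an illustration only, the sketch below assumes mean absolute error (MAE) on a hypothetical 1-5 freshness scale and shows how a relative error reduction of about 20% is calculated, as (baseline error minus improved error) divided by baseline error. All ratings and predictions are invented for the example, and the function names are hypothetical.

```python
def mean_absolute_error(predicted, target):
    """Average absolute difference between predictions and human ratings."""
    if len(predicted) != len(target) or not target:
        raise ValueError("inputs must be non-empty and the same length")
    return sum(abs(p - t) for p, t in zip(predicted, target)) / len(target)


def relative_error_reduction(baseline_error, improved_error):
    """Fraction by which improved_error is lower than baseline_error."""
    if baseline_error <= 0:
        raise ValueError("baseline_error must be positive")
    return (baseline_error - improved_error) / baseline_error


# Hypothetical human freshness ratings (1 = worst, 5 = best) for five images.
human_scores = [4, 3, 2, 5, 1]
# Hypothetical predictions from a model trained without human perception data...
baseline_preds = [4.5, 2.5, 3.0, 4.5, 1.5]
# ...and from a model trained with it.
human_informed_preds = [4.4, 2.6, 2.8, 4.6, 1.4]

baseline_mae = mean_absolute_error(baseline_preds, human_scores)        # 0.6
improved_mae = mean_absolute_error(human_informed_preds, human_scores)  # about 0.48
reduction = relative_error_reduction(baseline_mae, improved_mae)
print(f"Relative error reduction: {reduction:.0%}")  # → Relative error reduction: 20%
```

A relative measure like this is convenient because it stays comparable across rating scales, whereas an absolute drop in MAE depends on the units of the scale.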

The study suggests that machine learning algorithms could not only match but potentially improve upon human judgment in food quality evaluation. The implications for grocery stores and food processing facilities could be substantial. Retailers, for instance, could deploy systems built on these models to evaluate inventory quality continuously, helping ensure that only the best produce is displayed. Manufacturers could likewise integrate the technology into food safety and quality assurance processes, reducing waste and improving customer satisfaction.

The methodology could also extend beyond food quality evaluation. As Wang noted, the principle of training machine vision systems on insights from human perception could be applied broadly, from assessing the aesthetics of jewelry to evaluating consumer packaged goods, potentially changing how many industries interpret visual data.

Despite these promising findings, challenges remain in moving the technology from research settings to practical use. Real-world environments, where countless variables can influence perception and quality, present a substantial barrier. Confirming that the results replicate across other types of food and environmental contexts will also require further research.

Ongoing collaboration between machine learning experts, sensory scientists, and industry practitioners will also be essential for developing user-friendly applications that integrate smoothly into existing workflows.

Machine learning offers exciting advances in food quality prediction, but it needs a nuanced understanding of human perception to excel. By combining human insight with machine efficiency, future systems could change the way we assess and select food. As the research evolves, it points toward a new era in food technology, one in which humans and machines work together to make grocery shopping smarter and more reliable.