On August 1, 2024, the European Union’s Artificial Intelligence Act (AI Act) officially came into force, marking a pivotal moment in technology governance. The legislation establishes a regulatory framework for the development and use of artificial intelligence (AI) within the EU. It responds to growing concerns over the risks AI can pose, particularly in sensitive domains such as healthcare, employment, and education. A team of researchers led by Holger Hermanns of Saarland University and Anne Lauber-Rönsberg of Dresden University of Technology has begun assessing what the act means in practice, particularly for software developers and AI practitioners.

A central question surrounding the AI Act is its impact on programmers, the people who build the AI systems it regulates. Many developers are understandably curious about how the new legal framework will change their daily work. According to Hermanns, a question programmers commonly ask is, “What do I actually need to know?” That question reveals a significant gap between legal text and practical application: the legislation runs to 144 pages and is not written to be quickly digested by busy software developers.

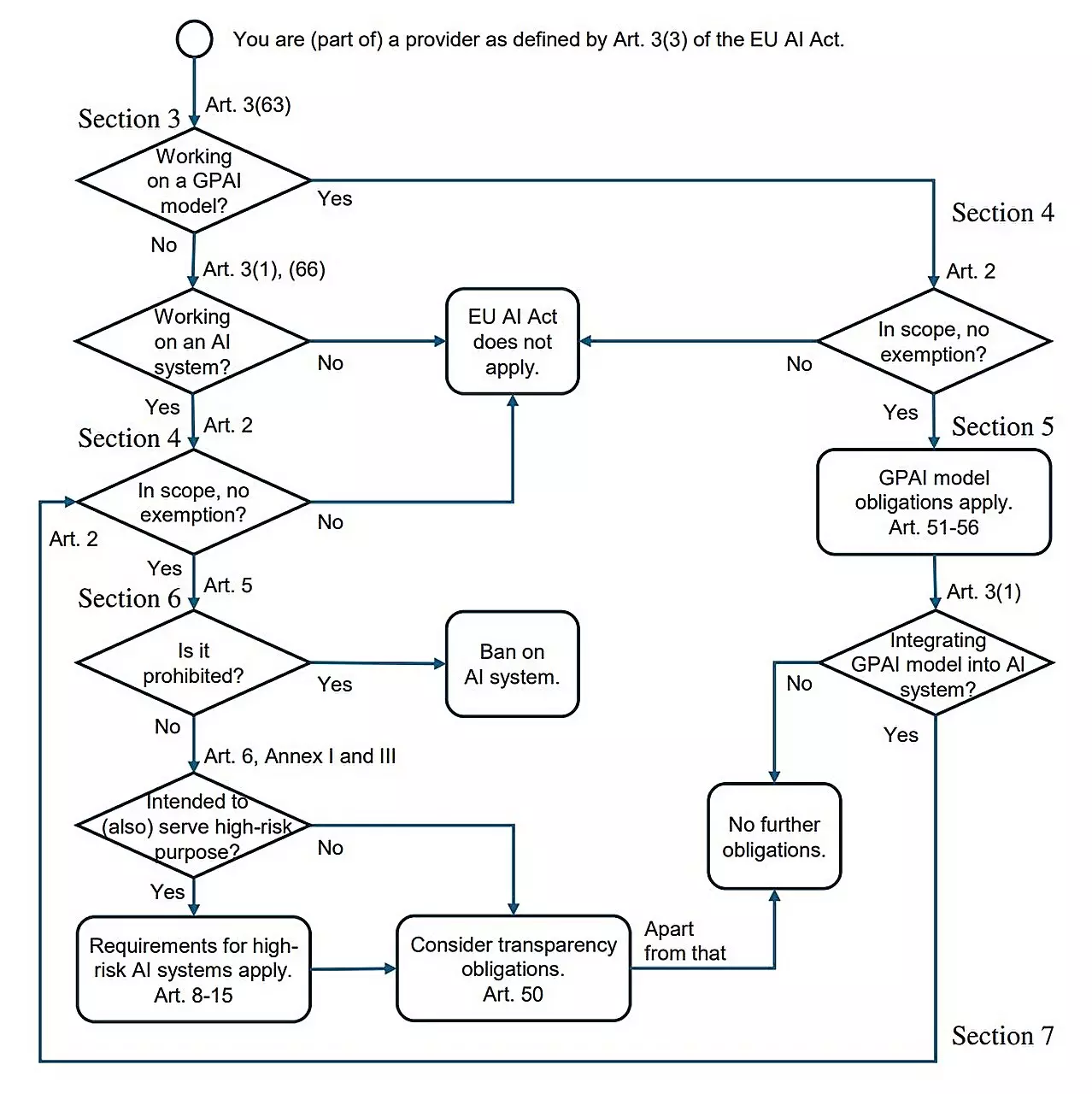

The researchers aim to translate the complexities of the AI Act into actionable guidance for developers, as set out in their forthcoming paper, “AI Act for the Working Programmer.” Their findings suggest that although the AI Act introduces substantive regulations, most developers may see little change in their workflow unless they work on high-risk AI applications.

One of the AI Act’s core objectives is to distinguish high-risk from low-risk applications. High-risk systems may include AI tools used for recruitment, credit scoring, medical diagnostics, and educational admissions. These applications face strict scrutiny under the new legislation because of their potential to cause harm or perpetuate discrimination. For instance, an AI system designed to assess job applicants must comply with the AI Act’s requirements once it is placed on the market or put into service.

By contrast, lower-risk applications, such as those used for gaming or spam filtering, are largely unaffected by the AI Act. Hermanns states the distinction clearly: applications with limited social consequences are exempt from the stringent rules imposed on high-risk systems. This risk-based segmentation lets innovation continue in less consequential areas while concentrating oversight where the stakes are highest.
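For a working programmer, the high-risk/low-risk split above amounts to a first-pass triage question. The Python sketch below encodes it as a lookup over only the examples named in this article. `RiskTier`, `HIGH_RISK_DOMAINS`, `LOW_RISK_DOMAINS`, and `triage` are hypothetical names, and the Act’s actual classification criteria are considerably more detailed, so the sketch illustrates the reasoning rather than making a legal determination.

```python
from enum import Enum


class RiskTier(Enum):
    HIGH = "high-risk: compliance obligations likely apply"
    LOW = "low-risk: largely unaffected"
    UNKNOWN = "unclassified: check the Act's criteria"


# Hypothetical, non-exhaustive lists built only from the examples named in this article.
HIGH_RISK_DOMAINS = {
    "recruitment",
    "credit scoring",
    "medical diagnostics",
    "educational admissions",
}
LOW_RISK_DOMAINS = {"gaming", "spam filtering"}


def triage(domain: str) -> RiskTier:
    """Map an application domain to a rough first-pass risk tier (illustrative only)."""
    key = domain.strip().lower()  # normalize case and surrounding whitespace
    if key in HIGH_RISK_DOMAINS:
        return RiskTier.HIGH
    if key in LOW_RISK_DOMAINS:
        return RiskTier.LOW
    # Anything not on either list is deliberately NOT assumed to be low-risk.
    return RiskTier.UNKNOWN
```

With this sketch, `triage("Credit scoring")` returns `RiskTier.HIGH`, while `triage("weather forecasting")` returns `RiskTier.UNKNOWN`. Returning an explicit "unknown" tier, rather than defaulting unlisted domains to low-risk, keeps the sketch from silently under-classifying a system.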

Compliance Requirements for High-Risk Systems

For developers of high-risk AI systems, the AI Act imposes several compliance requirements. One is that developers certify that their training data is suitable for its purpose and does not lead to discriminatory outcomes. Hermanns emphasizes the need for representational balance in training datasets to mitigate bias, underscoring programmers’ ethical responsibility in this domain.
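One narrow, measurable aspect of representational balance is whether each group’s share of the training data matches an expected share. The Python sketch below compares observed shares against a reference distribution and flags deviations. The function name `representation_gaps`, the list-of-dicts data format, the reference shares, and the default tolerance are all assumptions for illustration; passing such a check does not by itself establish that a dataset is free of bias.

```python
from collections import Counter


def representation_gaps(samples, attribute, reference, tolerance=0.05):
    """Flag groups whose share of the data deviates from a reference share.

    samples:   list of dicts, one per training example (hypothetical format)
    attribute: the key to check, e.g. "age_band" (hypothetical)
    reference: dict mapping group -> expected share, e.g. census proportions
    tolerance: maximum acceptable absolute deviation in share
    Returns a dict mapping each flagged group -> (observed - expected) share.
    """
    counts = Counter(sample[attribute] for sample in samples)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no samples to check")
    gaps = {}
    # Check every group that is expected OR present, so unexpected groups are flagged too.
    for group in sorted(set(reference) | set(counts)):
        observed = counts.get(group, 0) / total
        expected = reference.get(group, 0.0)
        if abs(observed - expected) > tolerance:
            gaps[group] = round(observed - expected, 3)
    return gaps
```

For a dataset that is 70% group `"A"` and 30% group `"B"`, checked against a 50/50 reference, the function returns `{'A': 0.2, 'B': -0.2}`: group A is over-represented by 20 percentage points and group B under-represented by the same amount.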

High-risk systems also require comprehensive documentation, similar to a traditional user manual, that explains how the system functions. This documentation aids user understanding and supports accountability. In addition, the act calls for logging mechanisms, comparable to the black boxes found in aircraft, that let stakeholders reconstruct the sequence of events behind a system’s decisions, promoting transparency and enabling retrospective analysis in cases of alleged harm or failure.
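The article describes the logging requirement only in general terms, so the following Python sketch shows just one way a developer might record decisions so that the sequence of events can be reconstructed later. The class `DecisionLog`, the function `verify`, the JSON Lines file format, and the SHA-256 hash chain (which makes after-the-fact edits detectable) are illustrative choices, not requirements stated in the Act.

```python
import hashlib
import json
import os
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class DecisionLog:
    """Append-only, hash-chained log of model decisions (illustrative sketch)."""

    def __init__(self, path):
        self.path = path
        self.prev_hash = GENESIS
        # Resume the chain if the log file already exists.
        if os.path.exists(path):
            with open(path, encoding="utf-8") as f:
                for line in f:
                    self.prev_hash = json.loads(line)["hash"]

    def record(self, model_version, inputs, output):
        """Append one decision; each entry commits to the hash of the one before it."""
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "inputs": inputs,
            "output": output,
            "prev_hash": self.prev_hash,
        }
        serialized = json.dumps(entry, sort_keys=True)
        entry_hash = hashlib.sha256(serialized.encode("utf-8")).hexdigest()
        with open(self.path, "a", encoding="utf-8") as f:
            f.write(json.dumps({"entry": entry, "hash": entry_hash}, sort_keys=True) + "\n")
        self.prev_hash = entry_hash
        return entry_hash


def verify(path):
    """Recompute every hash in the chain; return False if any entry was altered or removed."""
    prev = GENESIS
    with open(path, encoding="utf-8") as f:
        for line in f:
            record = json.loads(line)
            entry = record["entry"]
            if entry["prev_hash"] != prev:
                return False
            serialized = json.dumps(entry, sort_keys=True)
            if hashlib.sha256(serialized.encode("utf-8")).hexdigest() != record["hash"]:
                return False
            prev = record["hash"]
    return True
```

Because each entry includes the hash of its predecessor, changing or deleting any recorded decision causes `verify` to fail from that point onward, which is the property that makes such a log useful for retrospective analysis.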

Challenges and Opportunities Ahead

While the AI Act marks a significant step toward the ethical use of technology, it also presents challenges. Developers may face a steep learning curve as they adapt to the new compliance requirements. At the same time, the legislation is an opportunity for ethical innovation, encouraging developers to build systems that prioritize user safety and fairness.

Hermanns and his colleagues take a largely positive view of the AI Act, seeing it as a foundation for responsible AI governance across the continent. They also argue that the act will not undermine Europe’s competitiveness in AI, because it leaves broad latitude for research and development.

The European Union’s AI Act strikes a balance between safeguarding societal interests and fostering technological innovation. By separating high-risk from low-risk AI applications and setting out clear compliance requirements, the legislation seeks to create a safer digital landscape. As programmers work through the nuances of this new regulatory environment, embracing transparency and ethical responsibility can strengthen user trust and support the long-term viability of AI technologies in society. Once the legislation takes root, the evolving interplay between technology and law will shape the future of artificial intelligence in the European Union.