Advances driven by artificial intelligence have transformed the technological landscape, enabling innovation in sectors from healthcare to finance. Beneath these impressive strides, however, lies a daunting challenge: the enormous energy appetite of the infrastructure that supports AI. Data centers, the backbone of our digital world, are major energy consumers whose environmental impact is often overlooked. In 2022, they accounted for over 4% of U.S. electricity consumption, with nearly half of that energy going to cooling alone. As AI becomes more embedded in daily life, these demands are poised to rise steeply. The dominant cooling methods, principally fans and liquid cooling, are approaching their limits, which makes a fundamental shift necessary if growth is to be sustainable.

Limitations of Traditional Cooling Solutions

Current data center cooling techniques, while effective in the short term, are proving increasingly inadequate in terms of energy efficiency. Air cooling relies on fans to circulate cool air; it consumes substantial power and offers only moderate heat dissipation. Liquid cooling, using water or dielectric fluids, improves efficiency but still requires pumps, coolant circulation, and supporting infrastructure that draw significant energy. These tried-and-true methods face scalability challenges, especially as servers are packed more densely and computational workloads become more intensive. As the heat load on servers rises, so does the need for more sophisticated, energy-conscious cooling that can keep pace without further burdening power grids or enlarging carbon footprints.

Innovative Two-Phase Cooling: A Paradigm Shift

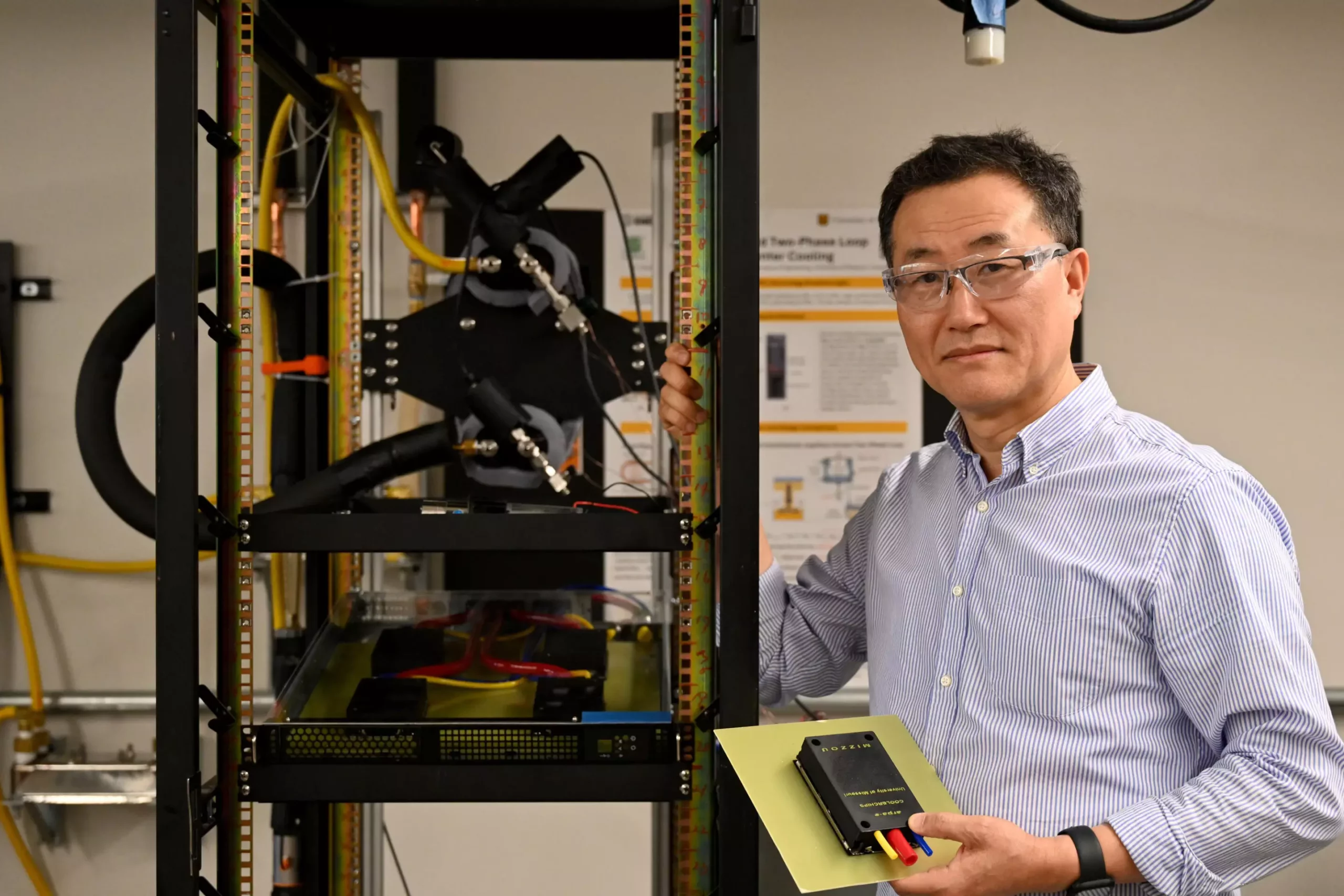

Enter Professor Chanwoo Park and his team at the University of Missouri, who are developing a two-phase cooling system based on phase change. In this approach, a liquid coolant evaporates on contact with the server's hot surfaces. Turning liquid into vapor absorbs a large amount of heat (the latent heat of vaporization) without raising the fluid's temperature, so evaporation carries heat away very effectively. The process resembles boiling, but it takes place within a carefully engineered porous layer that maximizes surface contact and heat transfer. A key advantage is that the system can operate passively, using no energy when cooling demand is low, and switch on active pumping only during peak heat loads, which adds little to overall energy consumption.
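
To see why evaporation makes such an effective heat sink, it helps to compare how much coolant must flow to carry away the same heat load with and without a phase change. The sketch below is a back-of-the-envelope illustration, not a model of Park's system. It assumes water near atmospheric pressure (specific heat about 4.18 kJ/(kg·K), latent heat of vaporization about 2257 kJ/kg) only because its properties are well known. The article does not say which fluid the Missouri system uses. The 1 kW heat load and 10 K temperature rise are hypothetical values.

```python
"""Back-of-the-envelope: coolant mass flow needed to carry away a heat load.

Illustrative assumption: the coolant is water near 1 atm. The fluid used in the
Missouri system is not specified; other fluids have different properties.
"""

CP_WATER = 4.18      # specific heat of liquid water, kJ/(kg*K), approximate
H_FG_WATER = 2257.0  # latent heat of vaporization of water at 100 C, kJ/kg, approximate


def flow_single_phase(heat_kw: float, delta_t_k: float, cp: float = CP_WATER) -> float:
    """Mass flow (kg/s) for a liquid that only warms by delta_t_k: m_dot = Q / (cp * dT)."""
    return heat_kw / (cp * delta_t_k)


def flow_two_phase(heat_kw: float, h_fg: float = H_FG_WATER) -> float:
    """Mass flow (kg/s) for a liquid that fully evaporates: m_dot = Q / h_fg."""
    return heat_kw / h_fg


if __name__ == "__main__":
    heat_kw = 1.0   # hypothetical 1 kW of server heat
    delta_t = 10.0  # hypothetical allowed temperature rise in a single-phase loop
    single = flow_single_phase(heat_kw, delta_t)
    two = flow_two_phase(heat_kw)
    print(f"Single-phase flow: {single * 1000:.1f} g/s")
    print(f"Two-phase flow:    {two * 1000:.2f} g/s")
    print(f"Two-phase needs about 1/{single / two:.0f} of the flow")
```

With these assumptions, the evaporating coolant needs about 1/54 of the mass flow of a loop that only warms its liquid by 10 K. That hints at why the pump can stay small or idle. Real two-phase loops rarely vaporize all of the liquid, and pump power depends on pressure drop as well as flow rate, so treat the ratio as an idealized illustration rather than a prediction.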

This approach sharply reduces thermal resistance. Compared with traditional methods, the chip needs a smaller temperature difference to move the same amount of heat into the coolant. The vapor then condenses back into liquid, releasing the heat it absorbed, and the liquid returns to repeat the cycle. Such a system promises not only superior cooling performance but also the energy efficiency and scalability that data centers need as they grow in size and complexity.
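
A common first-order way to see what lower thermal resistance buys is the steady-state relation T_chip = T_coolant + P × R_th, where P is the chip's power and R_th (in K/W) is the total resistance between chip and coolant. The sketch below applies it with made-up values: a 700 W chip, 30 °C coolant, and two hypothetical resistances. None of these numbers are measurements of Park's device. The point is only that halving R_th halves the temperature rise.

```python
"""Illustrative steady-state estimate of chip temperature from thermal resistance.

T_chip = T_coolant + P * R_th. All numbers are hypothetical placeholders,
not measurements of the Missouri system.
"""


def chip_temperature_c(power_w: float, r_th_k_per_w: float, coolant_c: float) -> float:
    """Return the steady-state chip temperature in degrees C."""
    return coolant_c + power_w * r_th_k_per_w


if __name__ == "__main__":
    power = 700.0   # hypothetical accelerator power, W
    coolant = 30.0  # hypothetical coolant temperature, C
    # Two hypothetical resistances: the second is half the first.
    for label, r_th in [("higher-resistance path", 0.08), ("lower-resistance path", 0.04)]:
        t = chip_temperature_c(power, r_th, coolant)
        print(f"{label}: R_th = {r_th} K/W -> chip at {t:.0f} C")
```

The same relation works in reverse. For a fixed chip temperature limit, a lower R_th lets the coolant run warmer, which can reduce the energy spent chilling it.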

Implications for the Future of AI and Sustainable Technology

The significance of Park's cooling innovation extends well beyond the laboratory. As AI-driven technologies become ubiquitous, demand for data processing power will soar, and current cooling systems will be stretched beyond their limits. A passive or low-energy cooling architecture offers a path to significantly reducing the ecological footprint of data centers. This is more than a technical improvement; it is an essential strategic step toward sustainable growth in the digital age.

Furthermore, the integration and rapid deployment of this cooling technology could reshape the industry’s approach to data center design, making efficient, high-performance infrastructure more accessible around the globe. The work dovetails with broader initiatives such as the Center for Energy Innovation, which aims to harness interdisciplinary expertise to tackle pressing energy challenges. By prioritizing innovative cooling solutions, researchers like Park are demonstrating that technological progress need not come at the expense of environmental sustainability.

As the digital economy expands, with AI at its core, the future of computing will depend on breakthroughs like these. If deployed at scale, such systems could substantially cut energy costs, reduce greenhouse gas emissions, and enable more sustainable technological advancement. This is not just about keeping servers cool; it is about shrinking the carbon load of the computing that will power the next wave of innovation.