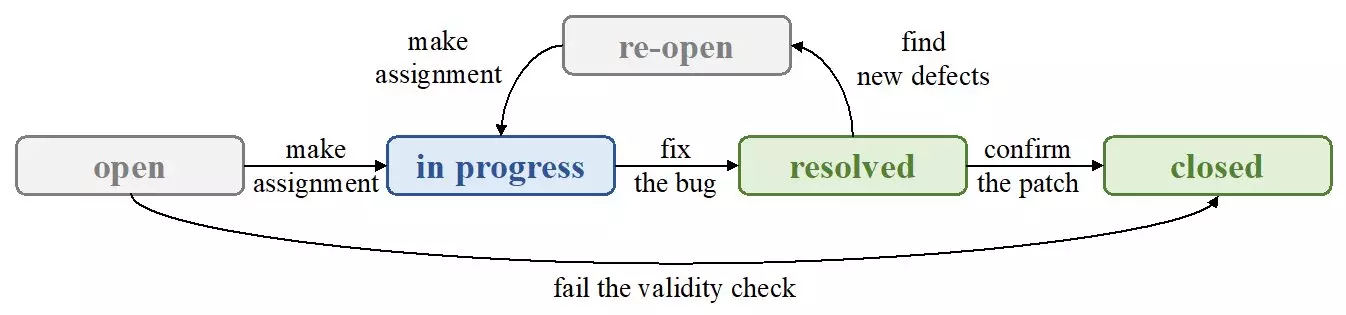

In software development, automating bug assignment (deciding which developer should fix a newly reported bug) is crucial for streamlining workflows and improving efficiency. Engineers often rely on textual bug reports to identify issues and route them. Sounds simple, right? Yet these reports are messy, and their free-form text often hinders effective bug localization and assignment, creating a bottleneck in the debugging process. Classic Natural Language Processing (NLP) techniques, despite their wide use, have clear limitations here, largely because the useful signal they extract is easily drowned out by the noise in unstructured text.

Recent Research Redefining Priorities

A recent study led by researcher Zexuan Li offers important insights into bug assignment by comparing the importance of textual features (the free-form text of a bug report) with nominal features (categorical fields that take one of a fixed set of values). Using TextCNN, a deep-learning text classifier built on convolutional neural networks, the researchers tested whether better NLP techniques would improve bug assignment accuracy. Surprisingly, textual features did not outperform nominal ones, challenging the conventional wisdom that places high value on textual analysis.
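To make the TextCNN idea concrete: a TextCNN-style model slides convolutional filters over the sequence of word embeddings in a bug report and keeps each filter's strongest response (max-over-time pooling), producing a fixed-length feature vector that a final layer can map to developers. The sketch below shows only that feature-extraction step in plain Python. The embeddings, filter weights, and the names `EMBEDDINGS`, `embed`, `conv1d_max_pool`, and `textcnn_features` are hypothetical; a real model learns its weights, and this is not necessarily how the study configured TextCNN.

```python
from typing import Dict, List

Vector = List[float]

# Hypothetical 2-dimensional word embeddings; a real model learns these.
EMBEDDINGS: Dict[str, Vector] = {
    "crash": [1.0, 0.0],
    "on": [0.0, 0.1],
    "startup": [0.5, 0.5],
    "ui": [0.0, 1.0],
}
UNKNOWN: Vector = [0.0, 0.0]


def embed(tokens: List[str]) -> List[Vector]:
    """Map each token to its embedding (zeros for unknown words)."""
    return [EMBEDDINGS.get(t, UNKNOWN) for t in tokens]


def conv1d_max_pool(seq: List[Vector], filt: List[Vector], bias: float = 0.0) -> float:
    """Slide one filter (width x dim) over the sequence, apply ReLU to each
    window's score, and keep the maximum activation (max-over-time pooling)."""
    width = len(filt)
    if len(seq) < width:
        raise ValueError("sequence shorter than filter width")
    best = float("-inf")
    for start in range(len(seq) - width + 1):
        window = seq[start:start + width]
        # Dot product between the filter and the embedding window.
        score = bias + sum(
            w * x
            for f_row, x_row in zip(filt, window)
            for w, x in zip(f_row, x_row)
        )
        best = max(best, max(0.0, score))  # ReLU, then running max
    return best


def textcnn_features(tokens: List[str], filters: List[List[Vector]]) -> List[float]:
    """One pooled feature per filter; filters may have different widths."""
    seq = embed(tokens)
    return [conv1d_max_pool(seq, f) for f in filters]


if __name__ == "__main__":
    # Two hypothetical filters: a width-2 (bigram) and a width-1 (unigram) detector.
    filters = [
        [[1.0, 0.0], [0.0, 1.0]],
        [[0.0, 1.0]],
    ]
    print(textcnn_features(["crash", "on", "startup"], filters))
```

Using several filter widths lets the model respond to short phrases of different lengths, and pooling makes the output length independent of the report's length.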

The study thus asks what really matters when assigning bugs. Its answer suggests that the role of textual data in automated bug assignment has been overestimated. Instead, the nominal features it identified, which largely capture developers' preferences, contribute substantially to assignment accuracy.

Methodological Insights into Feature Importance

Li’s team went beyond showing the limitations of textual features and quantified which features actually matter. They used a wrapper method, a feature-selection approach that repeatedly trains a classifier on candidate feature subsets and keeps the subsets that score best, combined with a bidirectional search strategy that both adds and removes features during the search. This let them systematically evaluate which features influence bug assignment outcomes.
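A wrapper method can be sketched as a search loop around any train-and-score routine. The version below assumes one common reading of a bidirectional strategy: stepwise selection that alternates a forward step (add the single most helpful feature) with a backward step (drop any feature whose removal raises the score). The study's exact procedure may differ. `evaluate` stands in for "train the classifier on this feature subset and return validation accuracy", and the scores in `TOY_SCORES` and the feature names are made up for illustration.

```python
from typing import Callable, Dict, FrozenSet, Iterable, Optional, Set

# Callable that trains/evaluates a classifier on a feature subset.
Evaluate = Callable[[FrozenSet[str]], float]


def bidirectional_select(features: Iterable[str], evaluate: Evaluate,
                         tol: float = 1e-12) -> Set[str]:
    """Stepwise wrapper selection: alternate a forward step and a backward
    step, re-scoring the wrapped classifier each time, until nothing improves.
    Invariant: `best` is always the score of the current `selected` set."""
    remaining = set(features)
    selected: Set[str] = set()
    best = evaluate(frozenset(selected))
    improved = True
    while improved:
        improved = False
        # Forward step: try each unused feature and keep the best improvement.
        candidate: Optional[str] = None
        cand_score = best
        for f in sorted(remaining):
            s = evaluate(frozenset(selected | {f}))
            if s > cand_score + tol:
                candidate, cand_score = f, s
        if candidate is not None:
            selected.add(candidate)
            remaining.discard(candidate)
            best = cand_score
            improved = True
        # Backward step: drop features whose removal raises the score.
        for f in sorted(selected):
            s = evaluate(frozenset(selected - {f}))
            if s > best + tol:
                selected.discard(f)
                remaining.add(f)
                best = s
                improved = True
    return selected


# Made-up validation accuracies for every subset of three hypothetical features.
TOY_SCORES: Dict[FrozenSet[str], float] = {
    frozenset(): 0.05,
    frozenset({"product"}): 0.17,
    frozenset({"component"}): 0.15,
    frozenset({"reporter"}): 0.08,
    frozenset({"product", "component"}): 0.20,
    frozenset({"product", "reporter"}): 0.18,
    frozenset({"component", "reporter"}): 0.24,
    frozenset({"product", "component", "reporter"}): 0.22,
}

if __name__ == "__main__":
    print(bidirectional_select(["product", "component", "reporter"],
                               lambda s: TOY_SCORES[s]))
```

With these made-up scores, the forward steps add `product`, then `component`, then `reporter`; the backward step then drops `product` because `{component, reporter}` scores higher than all three together. The score strictly increases on every accepted change, so the loop always terminates.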

The results were compelling. The nominal features, which reflect developer preferences, proved reliable and achieved competitive results, with accuracy between 11% and 25%, without any textual analysis at all. This shifts the emphasis away from trying to extract clarity from noisy text and toward understanding developer tendencies.
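A nominal-feature-only assigner can be surprisingly simple. The sketch below is an illustrative baseline, not the study's classifier. It assumes each historical bug records hypothetical nominal fields (`product`, `component`) plus the developer who fixed it, treats how often each developer fixed bugs with a given field value as that developer's preference, predicts the developer with the highest summed preference, and reports accuracy as the fraction of held-out bugs whose actual fixer is predicted. All data is invented.

```python
from collections import Counter, defaultdict
from typing import DefaultDict, Dict, List, Optional, Tuple

Bug = Dict[str, str]  # nominal field -> value, plus the "fixer" label

NOMINAL_FIELDS = ("product", "component")  # hypothetical field names


def fit_preferences(history: List[Bug]) -> DefaultDict[Tuple[str, str], Counter]:
    """Count, for every (field, value) pair, how many bugs each developer fixed."""
    prefs: DefaultDict[Tuple[str, str], Counter] = defaultdict(Counter)
    for bug in history:
        for field in NOMINAL_FIELDS:
            prefs[(field, bug[field])][bug["fixer"]] += 1
    return prefs


def predict(prefs: Dict[Tuple[str, str], Counter], bug: Bug) -> Optional[str]:
    """Score developers by summed preference counts; ties break alphabetically."""
    scores: Counter = Counter()
    for field in NOMINAL_FIELDS:
        # .get avoids inserting new keys into the defaultdict.
        scores.update(prefs.get((field, bug.get(field)), Counter()))
    if not scores:
        return None  # no history for any of this bug's field values
    return min(scores, key=lambda dev: (-scores[dev], dev))


def accuracy(prefs: Dict[Tuple[str, str], Counter], test: List[Bug]) -> float:
    """Fraction of test bugs whose actual fixer is the top prediction."""
    if not test:
        return 0.0
    hits = sum(predict(prefs, b) == b["fixer"] for b in test)
    return hits / len(test)


# Invented training history and held-out bugs.
HISTORY: List[Bug] = [
    {"product": "Core", "component": "UI", "fixer": "alice"},
    {"product": "Core", "component": "UI", "fixer": "alice"},
    {"product": "Core", "component": "Net", "fixer": "bob"},
    {"product": "Tools", "component": "Net", "fixer": "bob"},
    {"product": "Tools", "component": "Build", "fixer": "carol"},
]
TEST: List[Bug] = [
    {"product": "Core", "component": "UI", "fixer": "alice"},
    {"product": "Tools", "component": "Net", "fixer": "bob"},
    {"product": "Core", "component": "Build", "fixer": "dave"},
]

if __name__ == "__main__":
    prefs = fit_preferences(HISTORY)
    print(accuracy(prefs, TEST))
```

On the invented data it gets two of the three test bugs right. The third fixer never appears in the history, a cold-start case that a purely history-based preference model cannot predict.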

The Road Ahead: Integrating Knowledge Graphs

These observations point to concrete next steps. Future work might integrate knowledge graphs that connect influential features with descriptive terms, producing richer embeddings for nominal features. Such integration could lead to more intelligent, adaptive systems that exploit developer preferences without getting mired in the complexities of unstructured text.
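One simple way to realize such an enriched embedding is to blend a nominal value's own vector with the average vector of the descriptive terms it links to in the graph. This is a minimal sketch under loud assumptions: the article does not specify a method, and the graph (`GRAPH`), the term vectors (`TERM_VECTORS`), the node naming scheme, and the mixing weight `alpha` are all hypothetical.

```python
from typing import Dict, List

Vector = List[float]

# Hypothetical knowledge graph: nominal value -> linked descriptive terms.
GRAPH: Dict[str, List[str]] = {
    "component:Net": ["socket", "timeout"],
    "component:UI": ["button", "layout"],
}

# Hypothetical pre-trained term vectors (e.g. from a word-embedding model).
TERM_VECTORS: Dict[str, Vector] = {
    "socket": [1.0, 0.0],
    "timeout": [0.0, 1.0],
    "button": [2.0, 2.0],
    "layout": [0.0, 0.0],
}


def mean(vectors: List[Vector]) -> Vector:
    """Element-wise average of equally sized vectors."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def enriched_embedding(node: str, own: Vector, alpha: float = 0.5) -> Vector:
    """Blend a nominal value's own vector with the mean vector of the terms
    it links to; fall back to the own vector if no linked term is known."""
    neighbors = [TERM_VECTORS[t] for t in GRAPH.get(node, []) if t in TERM_VECTORS]
    if not neighbors:
        return list(own)
    context = mean(neighbors)
    return [alpha * o + (1 - alpha) * c for o, c in zip(own, context)]


if __name__ == "__main__":
    print(enriched_embedding("component:Net", [0.0, 0.0]))
```

Falling back to the value's own vector keeps unlinked or unseen values usable; a learned system might instead use dedicated graph-embedding methods or a graph neural network.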

The implications are significant. Rethinking bug assignment this way could spare developers cumbersome text-driven analyses and instead build on their established preferences. More broadly, it changes how we think about automation in software development, opening the door to tools that improve both precision and productivity in tackling persistent bugs.