Speech emotion recognition (SER) has emerged as a pivotal area of research, especially with the advent of deep learning. Applications range from enhancing user experiences in virtual assistants to improving mental health diagnostics through emotional analysis. However, as these technologies evolve, they face significant challenges that threaten their effectiveness and reliability. The risk of misuse and exploitation is becoming more urgent, particularly in light of emerging research highlighting vulnerabilities within these systems.

A recent study led by researchers at the University of Milan examined the adversarial vulnerabilities of SER systems, specifically how deep learning models behave under different forms of adversarial attack. These attacks fall broadly into two categories: white-box attacks, in which the attacker has full access to the model’s architecture and parameters, and black-box attacks, in which the attacker can only observe the model’s outputs. The research, published on May 27 in *Intelligent Computing*, systematically evaluated the effects of both types of attack across languages and on male and female speech samples.

The findings revealed that convolutional neural network long short-term memory (CNN-LSTM) models proved highly vulnerable when confronted with adversarially crafted inputs. Such inputs, known as adversarial examples, carry small “perturbations” that subtly modify the audio data and cause models to produce incorrect outputs. This raises serious concerns about the robustness of systems designed to interpret human emotion, an inherently complex and variable phenomenon.

The implications of the study are far-reaching. Every adversarial attack assessed caused a notable drop in the performance of the SER models. The researchers warned that this susceptibility could have severe consequences if attackers exploit it. More alarmingly, in certain instances black-box attacks, which operate with limited insight into the model’s inner workings, were more effective than white-box attacks. This suggests that attackers may be able to defeat a system without detailed knowledge of its internals, purely by observing the model’s outputs.
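To make the idea of attacking a model "purely by observing its outputs" concrete, here is a minimal sketch of a label-only attack. It is a crude random search followed by a line search, far simpler than the attacks evaluated in the study, and everything in it is a hypothetical stand-in: `classify` is a toy linear rule rather than a real SER model, and the four-number input merely plays the role of an audio feature vector.

```python
import random

def classify(x):
    """Hypothetical black box: returns only a label, never scores or gradients."""
    weights = [0.8, -0.5, 0.3, 0.9]  # hidden from the attacker
    score = sum(w * xi for w, xi in zip(weights, x)) - 0.1
    return "happy" if score > 0 else "sad"

def label_only_attack(query, x, rng, max_queries=1000, step=0.05):
    """Try random perturbations of growing size until the label changes."""
    original = query(x)
    radius = step
    for _ in range(max_queries):
        candidate = [xi + rng.uniform(-radius, radius) for xi in x]
        if query(candidate) != original:
            return candidate
        radius += step
    return None  # no adversarial input found within the query budget

def shrink(query, x, x_adv, steps=20):
    """Binary search on the segment from x to x_adv, keeping the closest
    point to x that is still misclassified."""
    original = query(x)
    lo, hi = 0.0, 1.0  # fraction of the way from x to x_adv
    best = x_adv
    for _ in range(steps):
        mid = (lo + hi) / 2
        point = [a + mid * (b - a) for a, b in zip(x, x_adv)]
        if query(point) != original:
            best, hi = point, mid
        else:
            lo = mid
    return best

def distance(a, b):
    """Largest change applied to any single input value."""
    return max(abs(ai - bi) for ai, bi in zip(a, b))

rng = random.Random(0)          # fixed seed so the run is repeatable
x = [0.2, -0.1, 0.4, 0.3]       # toy input, classified as "happy"
adv = label_only_attack(classify, x, rng)
refined = shrink(classify, x, adv) if adv is not None else None
```

The attacker never reads `weights`; the only signal is whether the returned label changed. That is the sense in which a black-box attack needs no knowledge of the model’s internals, although real attacks are considerably more query-efficient than this sketch.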

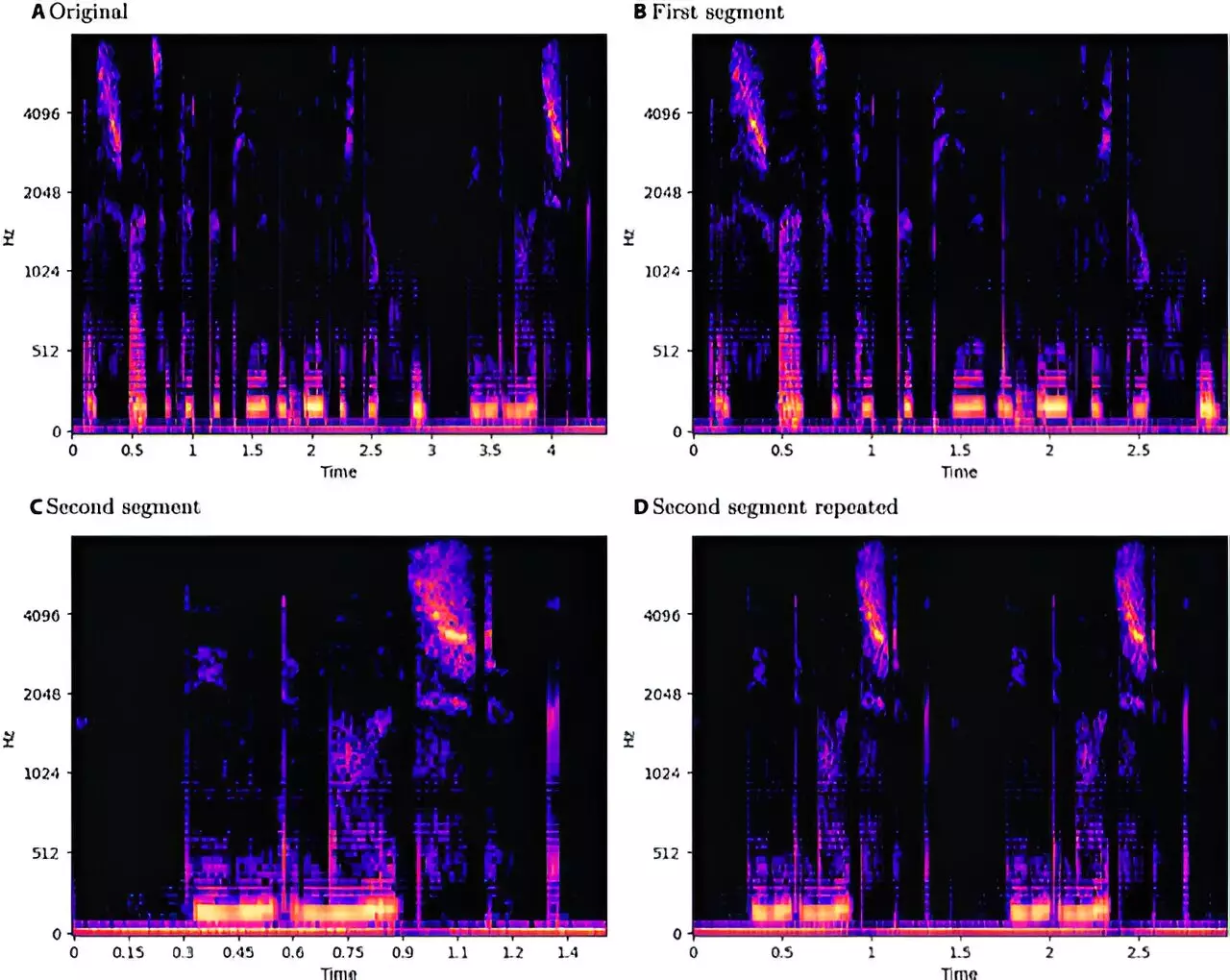

The study used multiple datasets, including EmoDB (German), EMOVO (Italian), and RAVDESS (English), to examine the SER models’ vulnerabilities across different languages. Attack methods such as the Fast Gradient Sign Method, a gradient-based white-box technique, and the Boundary Attack, a decision-based black-box technique, were used to simulate various adversarial scenarios. The researchers also applied pitch shifting and time stretching for data augmentation. Interestingly, the research also considered a gender perspective, analyzing how the models performed when interpreting male versus female speech.
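The Fast Gradient Sign Method can be illustrated on a very small scale. The sketch below applies a single FGSM step, x_adv = x + ε · sign(∇ₓ loss), to a toy logistic-regression classifier. The weights, input values, and `epsilon` are invented for illustration and have nothing to do with the CNN-LSTM models or the datasets used in the study.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    """Probability of the positive class under a toy logistic model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def fgsm(weights, bias, x, label, epsilon):
    """One FGSM step: move every input value by epsilon in the direction
    that increases the loss."""
    p = predict(weights, bias, x)
    # For binary cross-entropy on a logistic model,
    # d(loss)/d(x_i) = (p - label) * w_i.
    grad = [(p - label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical model parameters and input (assumptions, not from the study).
weights = [0.8, -0.5, 0.3, 0.9]
bias = -0.1
x = [0.2, -0.1, 0.4, 0.3]   # toy stand-in for audio features
label = 1                   # true class of x

x_adv = fgsm(weights, bias, x, label, epsilon=0.3)

print("clean prediction:      ", round(predict(weights, bias, x), 3))
print("adversarial prediction:", round(predict(weights, bias, x_adv), 3))
```

Because the attack reads the gradient directly from the model’s parameters, it is a white-box method. No input value moves by more than `epsilon`, yet the predicted probability falls from above 0.5 to below it, which flips the predicted class.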

The analysis found only minor performance differences between languages overall, although English was notably more susceptible to attacks and Italian speech showed greater resilience. The gender-based evaluation suggested a slight edge in male samples, although the differences were largely insignificant. Taken together, the language and gender comparisons give a fuller picture of where these models are vulnerable.

While identifying vulnerabilities in SER systems might hand would-be attackers a blueprint for exploitation, the argument for transparency in research is compelling. Sharing such findings lets attackers and defenders alike see where the weaknesses lie, which fosters a more informed and secure development landscape. By spurring efforts to fortify SER technologies, researchers act not merely as observers of these vulnerabilities but as active participants in building more resilient systems.

As the field of speech emotion recognition continues its ascent, the necessity for proactive measures against adversarial threats will inevitably be a point of focus. The future of SER depends not only on the sophistication of the models but also on the robustness of their defenses against a landscape of evolving threats. By embracing collaboration and transparency, the industry can strive for a more secure and trustworthy deployment of these transformative technologies.