Artificial intelligence, particularly language processing, has made significant strides in recent years. One persistent challenge remains, however: answering accurately when a model’s own knowledge is incomplete. Humans facing a hard question in that situation often ask someone who knows more. Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have developed an algorithm, Co-LLM, that gives large language models (LLMs) a similar option. It lets a general-purpose model “phone a friend,” handing parts of its answer to a specialized expert model when expert knowledge is required.

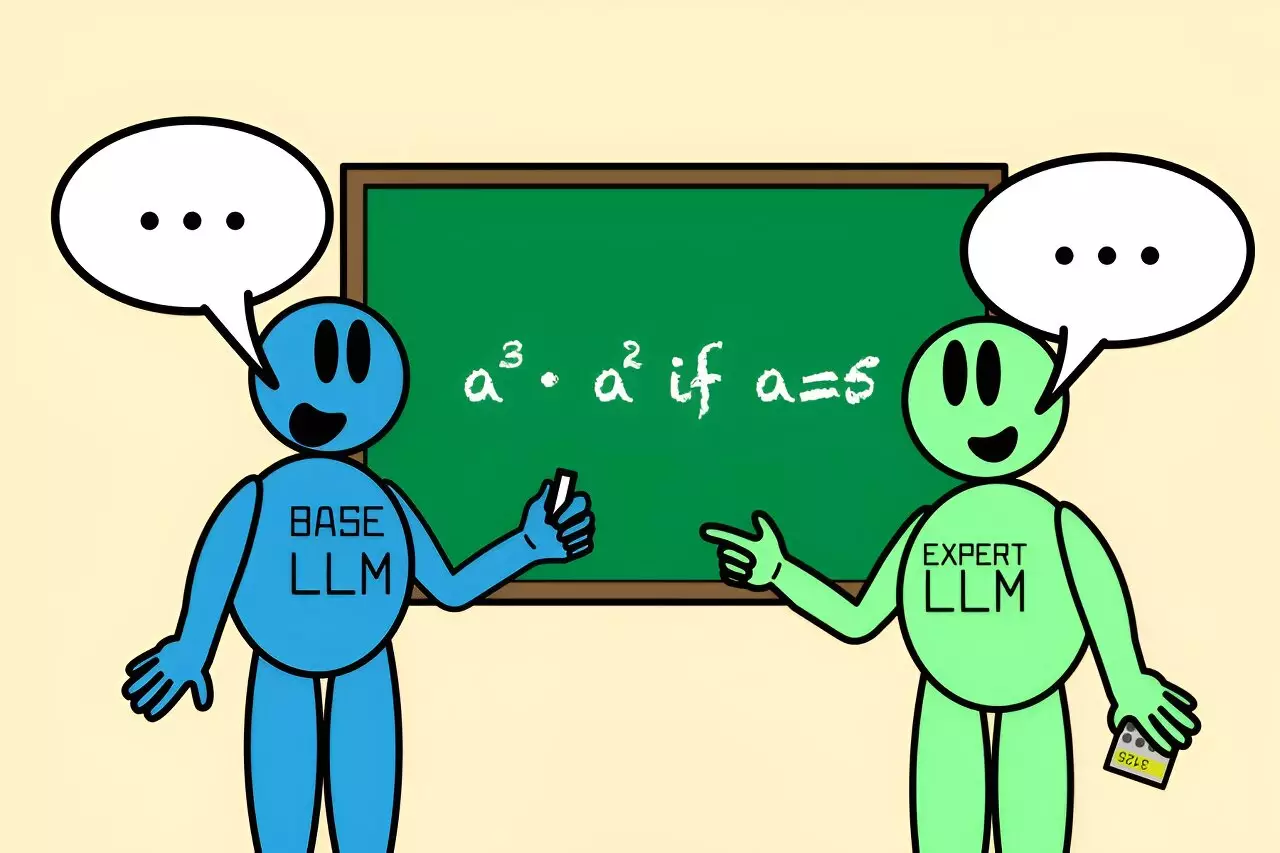

Other methods rely on complex formulas or large amounts of labeled data to spell out when a model should consult a specialized counterpart. Co-LLM takes a more natural approach. It pairs a general-purpose LLM with a specialized expert model, and as the general model generates its response, Co-LLM examines each word (token) and decides whether the expert model would supply a more accurate one. This improves the quality of answers, particularly in domains such as medicine and mathematics, and it also keeps generation efficient. Because the expert model is called only for selected tokens rather than for every one, the overall response time stays lower than if the expert were consulted throughout.
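To make the per-token idea concrete, here is a minimal Python sketch of token-level deferral. It is not the CSAIL implementation: the two “models” are hypothetical stand-in functions that return the next token given the tokens so far, and `defer` is a hypothetical gate standing in for Co-LLM’s learned decision. The loop lets the general model produce each token unless the gate hands that position to the expert, and it counts expert calls to show that the expert is used selectively.

```python
from typing import Callable, List, Tuple

# A "model" here is any function mapping the tokens so far to the next token.
NextToken = Callable[[List[str]], str]
Gate = Callable[[List[str]], bool]

EOS = "<eos>"


def co_decode(
    prefix: List[str],
    general: NextToken,
    expert: NextToken,
    defer: Gate,
    max_tokens: int,
) -> Tuple[List[str], int]:
    """Generate up to max_tokens tokens, asking the gate at every step whether
    the next token should come from the expert instead of the general model.

    Returns the generated tokens (without the prefix) and the number of
    expert calls, which shows how selectively the expert was used.
    """
    out = list(prefix)
    expert_calls = 0
    for _ in range(max_tokens):
        if defer(out):
            token = expert(out)  # hand this single position to the expert
            expert_calls += 1
        else:
            token = general(out)  # default: the general model keeps writing
        if token == EOS:
            break
        out.append(token)
    return out[len(prefix):], expert_calls


# Toy run with hypothetical models: the general model's script contains an
# arithmetic slip ("41"); the gate defers the token right after "is".
script = ["17", "times", "3", "is", "41", EOS]


def general_model(out: List[str]) -> str:
    return script[len(out)]  # replays the scripted draft, position by position


def math_expert(out: List[str]) -> str:
    return "51"  # stand-in "math expert" that knows 17 * 3


def defer_after_is(out: List[str]) -> bool:
    return bool(out) and out[-1] == "is"


tokens, calls = co_decode([], general_model, math_expert, defer_after_is, max_tokens=10)
print(" ".join(tokens), "| expert calls:", calls)
# 17 times 3 is 51 | expert calls: 1
```

In the toy run the general model would have written “41”; the gate routes that one position to the expert, which supplies “51”, and every other token comes from the general model.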

A Dynamic Decision-Making Process

At the core of Co-LLM is a “switch variable.” Acting much like a project manager, it decides when the general-purpose model needs specialized help. As the LLM builds its reply token by token, the switch assesses each position and identifies the moments when an expert contribution is warranted. For instance, when asked to name some extinct bear species, the general model can draft the answer and let its expert partner fill in precise details, such as when a particular species went extinct.
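One way to picture the switch is as a small gate that reads the general model’s internal state at each step and outputs the probability that the next token should come from the expert. The sketch below assumes a logistic (sigmoid) gate over a hidden-state vector plus a fixed decision threshold; the function names, weights, bias, and threshold are hypothetical, illustrative values, not trained parameters from Co-LLM.

```python
import math
from typing import Sequence


def switch_probability(hidden: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """P(expert should produce the next token): a logistic gate over the
    general model's hidden state (an assumed, simplified form of the switch)."""
    if len(hidden) != len(weights):
        raise ValueError("hidden state and weights must have the same length")
    z = sum(h * w for h, w in zip(hidden, weights)) + bias
    # Numerically stable sigmoid: avoids overflow in exp() for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def should_defer(
    hidden: Sequence[float],
    weights: Sequence[float],
    bias: float,
    threshold: float = 0.5,
) -> bool:
    """Hand the token to the expert only when the switch probability exceeds the threshold."""
    return switch_probability(hidden, weights, bias) > threshold


# Illustrative, untrained parameters and two made-up hidden states.
weights, bias = [2.0, -1.0], 0.0
routine_state = [0.1, 1.0]     # z = -0.8, p about 0.31: keep the general model
specialist_state = [1.5, 0.5]  # z = 2.5, p about 0.92: call the expert

for name, h in [("routine", routine_state), ("specialist", specialist_state)]:
    p = switch_probability(h, weights, bias)
    print(f"{name}: p={p:.2f}, defer={should_defer(h, weights, bias)}")
```

In this sketch the threshold is a hand-chosen knob: raising it sends fewer tokens to the expert, which would typically save compute at some cost in accuracy, and lowering it does the opposite.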

This division of labor lets the general model supply the foundation of a response while the expert contributes only where it is needed. As Shannon Shen, an MIT Ph.D. student and a lead author of the study, put it, the framework lets models learn to collaborate organically, much as humans intuitively recognize when to ask an expert for advice.

Applications and Flexibility of Co-LLM

Co-LLM is not limited to casual queries. The researchers also tested it in demanding domains such as biomedicine, using data such as the BioASQ medical set to pair a general LLM with expert models trained on large amounts of medical data. This pairing lets the system answer sophisticated biomedical questions more precisely and reliably. For example, a question about the ingredients of a prescription drug is more likely to be answered correctly when a dedicated biomedical model can supply the specialized details.

Mathematics offers another example. On a challenging math problem where the general-purpose model on its own miscalculated, Co-LLM reached the correct solution by consulting a specialized mathematical model. The exchange shows how collaboration between models can reduce errors compared with a single model working in isolation.

Beyond Efficiency: Future Enhancements

The MIT researchers already have further refinements in mind. Future versions may include a more robust feedback mechanism that lets the general model course-correct when the expert’s response appears to be wrong, so that the system stays accurate even when the expert model makes a mistake.

The team also intends to keep the models updated with the most current data available, so that users receive answers based on the latest information. This would be especially valuable in enterprise applications, where decisions depend on up-to-date data. Combining access to current knowledge with the ability to reason would considerably broaden Co-LLM’s practical, real-world uses.

Colin Raffel, an associate professor at the University of Toronto, highlighted Co-LLM’s granular, token-level approach to deciding when to defer, noting the flexibility it brings to collaboration between models. As language models continue to evolve, strategies like Co-LLM could change how these systems work together, making their responses more efficient, more accurate, and better suited to context.

Co-LLM shows that language models need not work alone: like people, they can learn to recognize when to seek expert help. As the researchers refine the approach, it could make AI-generated answers both more efficient and more accurate, bringing reliable AI-assisted problem-solving closer.