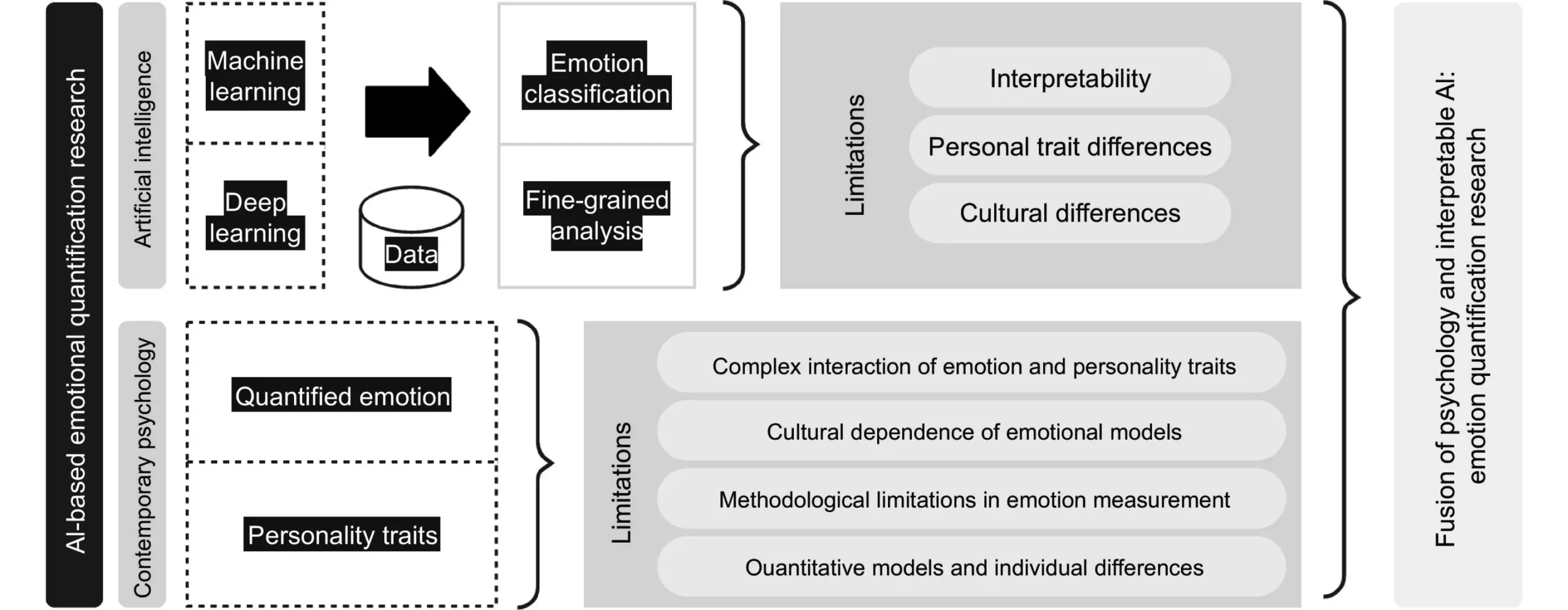

Human emotions are multifaceted, which makes them among the hardest aspects of human experience to quantify and understand. Each person's emotional landscape is shaped by personal, social, and cultural influences, and this complexity often leads to misunderstandings, even in face-to-face interactions. In recent years, however, researchers have begun combining traditional psychological frameworks with artificial intelligence (AI) to build a more nuanced understanding of emotional states. These efforts could change how mental health care, education, and customer service are delivered by improving how well emotions are understood.

The integration of AI into emotion recognition introduces methods that could improve the accuracy of emotional assessments. Machine learning models can be trained to identify and interpret emotional cues across different modalities: not only facial expressions but also physiological signals such as heart rate variability, skin conductance, and neural activity measured by electroencephalography (EEG). The strength of this approach is that it combines these diverse data sources, giving a more holistic view of a person’s emotional state.
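As a concrete illustration of one physiological signal named above, heart rate variability is often summarized as RMSSD, the root mean square of successive differences between heartbeat intervals. The sketch below shows how such a feature might be computed before being passed to a model. The function name `rmssd` and the sample intervals are hypothetical and are not taken from any system described in this article.

```python
import math


def rmssd(rr_intervals_ms):
    """Root mean square of successive differences between RR intervals.

    rr_intervals_ms: times between consecutive heartbeats, in milliseconds.
    """
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    # Difference between each interval and the one that follows it.
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Made-up intervals, for illustration only.
sample_rr = [800, 810, 790, 800]
hrv_feature = rmssd(sample_rr)
```

A value like `hrv_feature` would be only one input among many. On its own it says little about a specific emotion, which is why it is typically combined with other signals.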

Feng Liu, a prominent researcher in this domain, emphasizes that combining established psychological practices with AI algorithms can lead to groundbreaking advances. As emotion recognition technology evolves, it creates opportunities for personalized interactions that can substantially improve user experiences. Whether in healthcare, education, or customer service, this technology can support a deeper understanding of user emotions, leading to service models that are more attuned to individual needs.

Multi-modal Recognition: A Comprehensive Approach

One of the most significant breakthroughs in emotion quantification is multi-modal emotion recognition. By integrating several perceptual inputs, such as visual cues from facial expressions, auditory signals from tone and speech, and tactile feedback, AI systems can develop a more nuanced interpretation of human emotions. This approach not only enriches the data collected but also reduces the likelihood of misinterpretation that comes from relying on a single source of input. In doing so, multi-modal recognition offers a more robust framework for empathy and emotional intelligence in human-computer interactions.
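One simple way to combine modalities is late fusion: each modality produces its own probability estimate over emotion labels, and the estimates are averaged. The sketch below is a minimal illustration under assumptions, not the method used by any researcher mentioned here. The label set, the modality names, and all probabilities are hypothetical.

```python
EMOTIONS = ("happy", "sad", "angry", "neutral")  # hypothetical label set


def late_fusion(modality_probs, weights=None):
    """Weighted average of per-modality probability distributions.

    modality_probs: {modality name: {emotion: probability}}
    weights: optional {modality name: non-negative weight}; equal if omitted.
    """
    if not modality_probs:
        raise ValueError("at least one modality is required")
    if weights is None:
        weights = {name: 1.0 for name in modality_probs}
    total = sum(weights[name] for name in modality_probs)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return {
        emotion: sum(
            weights[name] * probs[emotion]
            for name, probs in modality_probs.items()
        ) / total
        for emotion in EMOTIONS
    }


def predict(modality_probs, weights=None):
    """Return the emotion with the highest fused probability."""
    fused = late_fusion(modality_probs, weights)
    return max(fused, key=fused.get)


# Made-up outputs from three hypothetical single-modality models.
example = {
    "face": {"happy": 0.5, "sad": 0.1, "angry": 0.1, "neutral": 0.3},
    "voice": {"happy": 0.2, "sad": 0.5, "angry": 0.1, "neutral": 0.2},
    "physiology": {"happy": 0.1, "sad": 0.6, "angry": 0.2, "neutral": 0.1},
}
fused_label = predict(example)
face_only_label = predict({"face": example["face"]})
```

In this made-up case the face model alone favors "happy" (a polite smile, perhaps), while the fused estimate favors "sad" because the voice and physiological signals disagree with the face. That is the kind of single-source misreading a multi-modal system is meant to reduce.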

Building such systems requires collaboration across several fields, including psychology, neuroscience, and computer science. Liu’s insights suggest that fostering these interdisciplinary partnerships is essential to unlocking the full potential of emotion quantification technologies. The result could substantially improve mental health assessments, enabling practitioners to better understand patient needs and tailor interventions accordingly.

Despite these promising advances in emotion recognition, ethical considerations must not be overlooked. The use of AI in sensitive areas like mental health raises critical questions about data privacy and security. Because these systems collect sensitive emotional data, robust safeguards are essential to protect individuals’ privacy. Transparency in data handling is equally vital, so that individuals feel safe and informed about how their information is being used.

In addition to privacy concerns, cultural sensitivity must be a core consideration in developing emotion recognition technologies. The way emotions are expressed and interpreted can vary significantly across cultures, and an AI system that fails to recognize these differences may perpetuate biases. Developers must ensure that their algorithms account for these differences, allowing for a more accurate portrayal of emotional states that respects individual backgrounds.
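One basic check for this kind of bias is to compare a model's accuracy across cultural or demographic groups rather than reporting a single overall figure. The sketch below assumes evaluation records of the form (group, true label, predicted label). The function names, group labels, and records are hypothetical, and a real audit would need far more data and more careful metrics.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute accuracy separately for each group.

    records: iterable of (group, true_label, predicted_label) tuples.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        if truth == predicted:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(records):
    """Difference between the best and worst per-group accuracy."""
    per_group = accuracy_by_group(records)
    return max(per_group.values()) - min(per_group.values())


# Made-up evaluation records, for illustration only.
sample_records = [
    ("group_a", "happy", "happy"),
    ("group_a", "sad", "sad"),
    ("group_b", "happy", "sad"),
    ("group_b", "sad", "sad"),
]
gap = accuracy_gap(sample_records)
```

A large gap does not by itself explain why the model performs worse for one group, but it flags where training data or labeling assumptions deserve a closer look.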

The Path Forward: A Collaborative Future

As we embrace the intersection of AI and emotional intelligence, we must acknowledge that the journey is only beginning. Researchers and developers must remain focused on creating systems that are not only advanced but also ethical and culturally aware. AI that understands human emotions accurately could reshape many fields, particularly as mental health becomes an increasingly important societal concern.

Ultimately, the fusion of traditional emotional awareness with modern AI capabilities offers a real opportunity to change how we assess and respond to human emotions. If harnessed thoughtfully, emotion recognition technologies could create more humane and empathetic spaces in healthcare, education, and beyond, leading to a deeper understanding of ourselves and one another.