Artificial intelligence (AI) has become an integral part of modern technology, driven by algorithms that demand immense amounts of data. While there have been significant advancements in AI, researchers continue to grapple with inherent limitations in data processing. Chief among these challenges is a phenomenon known as the von Neumann bottleneck, which hampers the efficiency of data operations crucial for machine learning. Recent work by a research team led by Professor Sun Zhong from Peking University’s School of Integrated Circuits offers a promising solution with the introduction of a dual in-memory computing (dual-IMC) scheme.

The von Neumann bottleneck refers to the mismatch between how quickly a processor can compute and how quickly data can be moved to it from memory. Traditional computing architectures, including those that run AI techniques such as neural networks, lose efficiency to this bottleneck: data must travel from memory to the processor before any work can be done, which costs both time and energy. As datasets grow in scale and complexity, these costs become increasingly pronounced and limit what AI and machine learning systems can do.

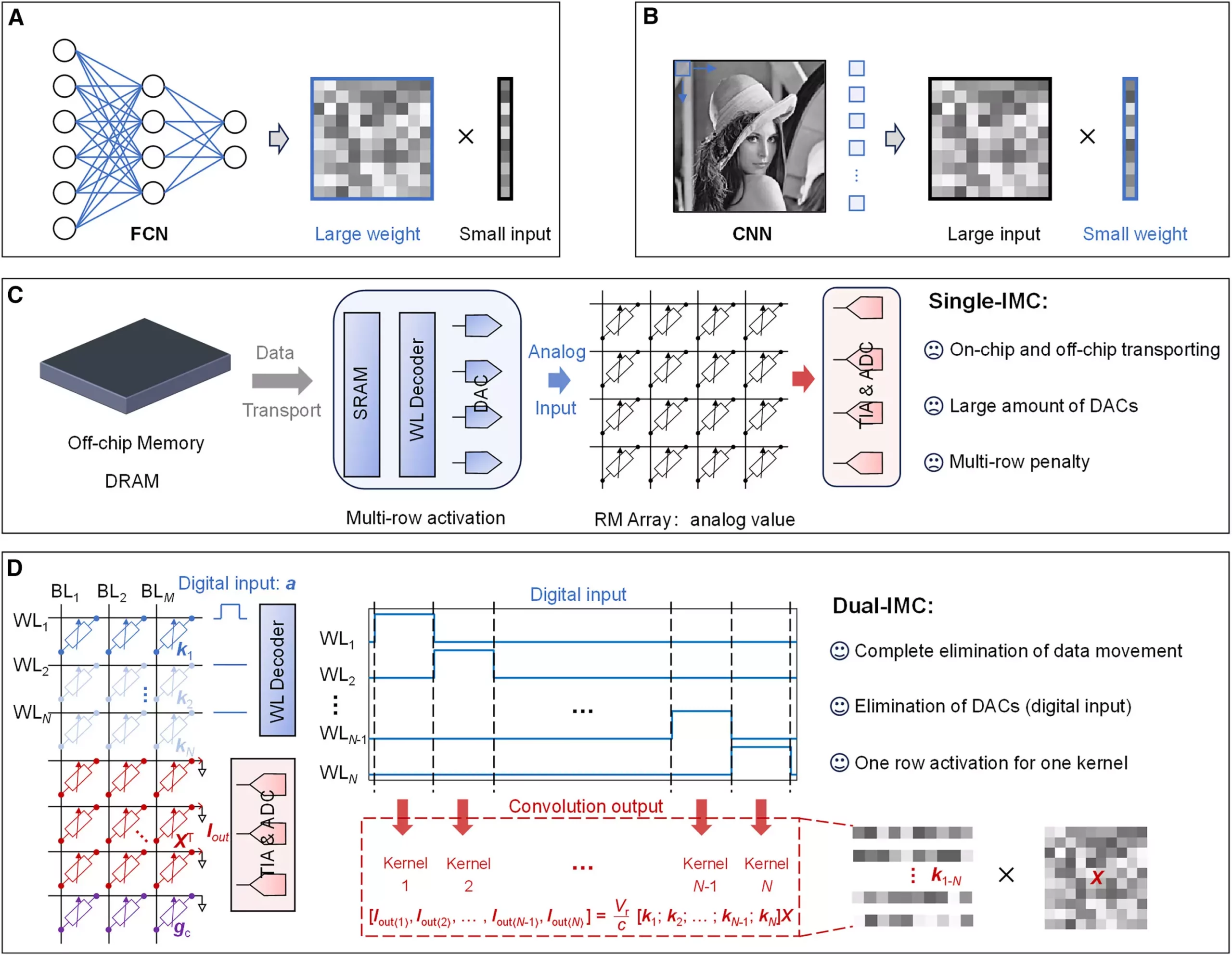

To appreciate the significance of this bottleneck, consider matrix-vector multiplication (MVM), the operation at the heart of neural network computation. In existing schemes the two operands are handled differently: the weights are stored in memory chips, while the inputs are fed in from external sources. This split raises the risk of inefficiencies and slowdowns, because data is constantly shuttled between memory and processing units.
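For readers who want to see the operation itself, here is a minimal sketch of an MVM in plain Python. The function name `matvec` and the small weight and input values are illustrative assumptions, not taken from the research; the point is only that every output value needs one full row of weights plus the whole input vector.

```python
def matvec(weights, inputs):
    """Return y where y[i] = sum over j of weights[i][j] * inputs[j]."""
    if any(len(row) != len(inputs) for row in weights):
        raise ValueError("each weight row must match the input length")
    # Each output element is the dot product of one weight row with the inputs.
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


# Hypothetical 2x3 weight matrix and 3-element input vector.
weights = [[1.0, 2.0, 3.0],
           [0.5, -1.0, 0.0]]
inputs = [2.0, 1.0, 0.0]

outputs = matvec(weights, inputs)
print(outputs)  # [4.0, 0.0]
```

In a neural network layer the weight matrix is typically far larger than this toy example, which is why the cost of fetching operands matters so much.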

The research from Professor Sun and his team departs from conventional strategies by adopting a dual-IMC framework. Unlike traditional single in-memory computing (single-IMC) models, which keep only the weights in memory, the dual-IMC approach stores both the inputs and the weights of a neural network in the same memory array. This configuration allows computations to be performed entirely in memory, reducing the need for constant data movement between memory and processing units.
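The difference between the schemes can be illustrated with a simple bookkeeping sketch. It counts how many operand values have to be brought to the place where one MVM is computed, under the description given here: a conventional processor fetches weights and inputs, a single-IMC array holds the weights but still receives the inputs, and a dual-IMC array holds both. The function `values_moved` and the scheme labels are hypothetical names chosen for this illustration, and the model ignores outputs, numerical precision, and all circuit detail, so it is not a model of the team's hardware.

```python
def values_moved(rows, cols, scheme):
    """Count operand values brought to the compute site for one MVM.

    Toy model only: ignores outputs, precision, caching, and circuit detail.
    """
    weight_values = rows * cols   # one value per matrix entry
    input_values = cols           # one value per input element
    if scheme == "von_neumann":   # weights and inputs both fetched from memory
        return weight_values + input_values
    if scheme == "single_imc":    # weights stay in the array; inputs are fed in
        return input_values
    if scheme == "dual_imc":      # weights and inputs both reside in the array
        return 0
    raise ValueError(f"unknown scheme: {scheme}")


# Hypothetical 256x256 layer, chosen only to make the contrast visible.
for scheme in ("von_neumann", "single_imc", "dual_imc"):
    print(scheme, values_moved(256, 256, scheme))
```

For this assumed 256x256 layer the counts are 65792, 256, and 0 respectively, which mirrors the argument in the text: single-IMC removes the weight traffic, and dual-IMC removes the input traffic as well.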

By testing the dual-IMC scheme on resistive random-access memory (RRAM) devices, the researchers were able to demonstrate practical applications in tasks like signal recovery and image processing. The use of RRAM for the dual-IMC architecture not only enhances computational speed but also significantly reduces the associated energy consumption—key factors in modern computing environments where efficiency is paramount.

The impact of the dual-IMC scheme is multifaceted, with benefits in both performance and cost. First, fully in-memory computation is more efficient than existing models, which often rely on off-chip dynamic random-access memory (DRAM) or on-chip static random-access memory (SRAM). Removing those memory accesses cuts the associated delays and improves processing speed.

Further advantages include lower production costs. The elimination of digital-to-analog converters (DACs)—which are essential for the single-IMC approach—means less circuitry is required, resulting in more compact designs with reduced power requirements. Moreover, with easier integration into existing architectures, the dual-IMC framework paves the way for more energy-efficient computing and could significantly lower the computational latency inherent in traditional setups.

As reliance on data-driven technologies continues to grow, breakthroughs such as the dual-IMC scheme mark an important step in addressing the limitations of conventional computing architectures. By easing the von Neumann bottleneck, Professor Sun’s work may set the stage for a new era in artificial intelligence and machine learning in which rapid data processing and energy efficiency go hand in hand.

The implications of this research extend beyond immediate technical gains; they suggest a shift in how computing hardware is designed. Dual-IMC technology could benefit not only AI modeling but also a wide range of applications across industries. As researchers continue to innovate, the focus will remain on applying these advances to future developments in AI and computing technology.